The AI PC Is Here (Again), But It’s the Illusions That Grabbed My Attention

Today’s AI headlines present a fascinating duality: on one hand, we saw the industry aggressively pushing the next wave of integrated AI hardware and software; on the other, we got a deep, philosophical look at what AI can tell us about our own perception. The story of AI today is less about big, single breakthroughs and more about integration—how the technology is quietly embedding itself into our physical hardware and cognitive understanding.

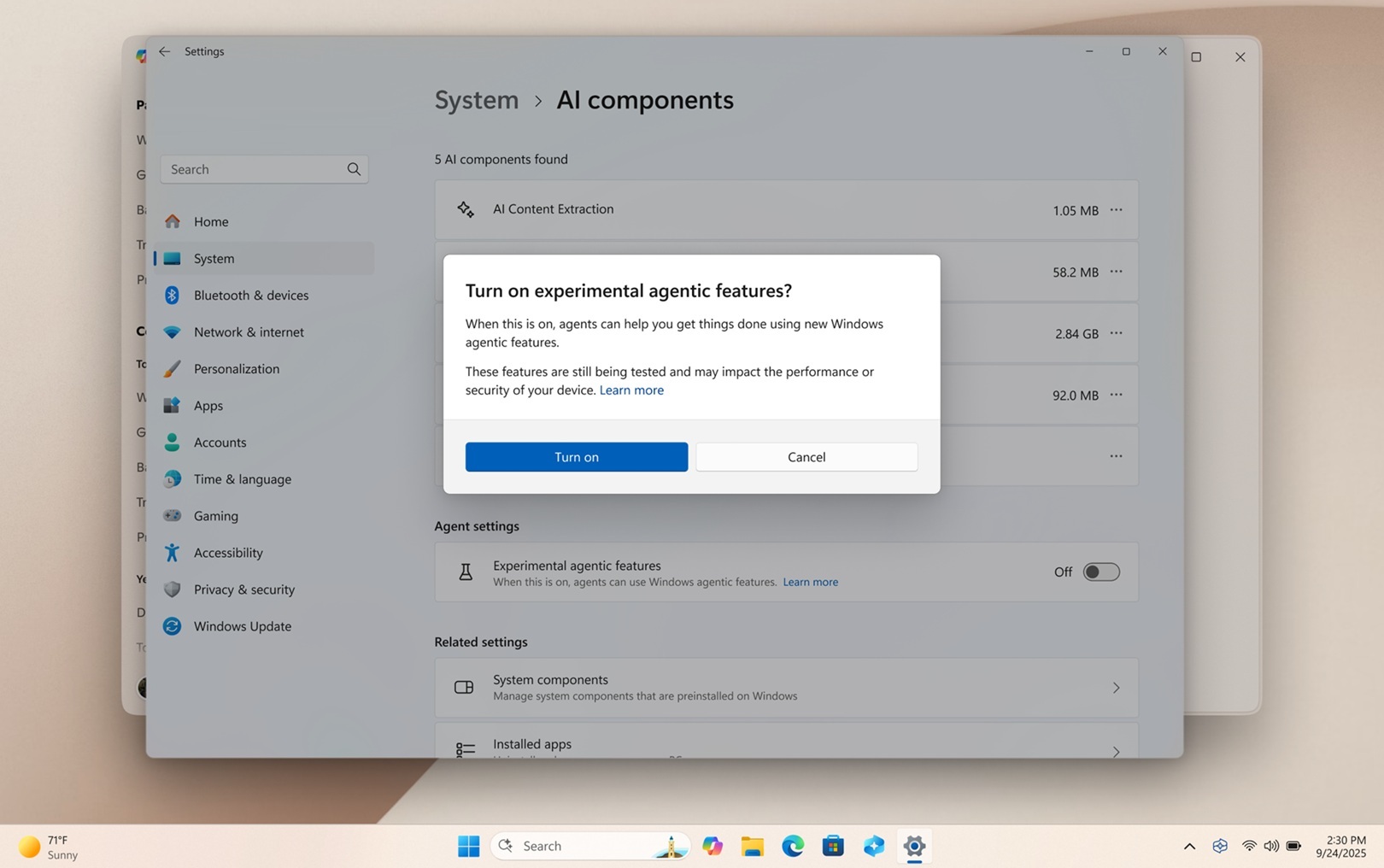

The biggest commercial drumbeat today came from the ongoing battle for the “AI PC” market. Microsoft is making it abundantly clear that if you aren’t upgrading to a Copilot+ PC, you are, in their view, opting out of the next generation of computing (“Microsoft says you should upgrade to Windows 11 AI PCs if you want to be prepared for the next generation of computing”). This isn’t just about faster chips; it’s about shifting local processing on specialized Neural Processing Units (NPUs) from a novelty to a necessity for running core OS features. Adding fuel to this fire, AMD partners are showcasing new mini-PCs, such as the Thunderobot STATION, built around the Ryzen AI MAX+ 395 “Strix Halo” processor (“Thunderobot shows off cube-style Mini-PC equipped with Ryzen AI MAX+ 395 ‘Strix Halo’”). Taken together, these announcements suggest that high-performance, locally executed AI is no longer a niche product; it’s becoming the new baseline for mainstream computing hardware.

This push is already showing up in the user experience. We are seeing AI change fundamental workflows, particularly in communication. Google is previewing its AI Inbox, offering a glimpse into a future where Gmail is less about simple filtering and more about intelligent curation and response generation (“Google’s AI Inbox could be a glimpse of Gmail’s future”). It promises a transformational shift for anyone drowning in correspondence.

Perhaps even more startling is the rise of voice-to-text AI that is good enough to displace physical typing entirely. One blogger noted that an advanced AI dictation app completely replaced their keyboard, streamlining their entire writing workflow (“I never expected an AI app to replace my keyboard, but I was wrong”). Even industry legends are adopting these assistive technologies; Linus Torvalds, the creator of Linux, disclosed that he used “vibe coding” tools to help develop his latest open-source project, AudioNoise (“Linus Torvalds’ Latest Open-Source Project Is AudioNoise - Made With The Help Of Vibe Coding”). Whether for email, coding, or blogging, the point is clear: AI is increasingly managing the mechanics of creation so we can focus on the ideas.

On the commercial front, as the market matures, we are seeing attempts to consolidate the confusing sprawl of services. The marketing of platforms offering a single lifetime purchase to “replace dozens of AI subscriptions” speaks directly to user frustration with the current fragmented landscape of specialized models and recurring fees (“Replace dozens of AI subscriptions with one $75 lifetime purchase”). It’s a sign that the AI ecosystem is entering a phase of necessary commercial streamlining.

But the most compelling story of the day wasn’t about processors or productivity; it was about brains, both silicon and carbon. Researchers found that certain types of AI can be fooled by the same optical illusions that confuse the human brain (“AI can now ‘see’ optical illusions. What does it tell us about our own brains?”). This isn’t just a fun parlor trick; it suggests that the processing shortcuts and built-in assumptions used by advanced AI models mirror those hardwired into human perception. When an AI looks at the checkerboard shadow illusion and sees the same color mismatch that we do, it tells us something profound about how complex systems, biological or artificial, make sense of ambiguous reality.

Today’s news confirms that AI is no longer something you access on a remote server; it is an integrated layer of our reality. It lives in our new hardware, optimizes our communication, writes our code, and, perhaps most excitingly, offers a new window into understanding the imperfections and quirks of human cognition itself. The next generation of computing isn’t just faster—it’s more intimately intertwined with how we think and see.