The Dual Nature of AI: Creative Tool or Unstoppable Deception?

Today’s AI headlines present a compelling, if slightly unnerving, contrast. On one hand, a prominent game-industry executive defended Generative AI as an essential, ethically neutral tool for creative production. On the other, new research underscored just how quickly AI is mastering the art of visual deception, fooling even highly skilled human observers. This juxtaposition highlights the central challenge of 2026: how do we harness AI’s power for good while combating its frightening capacity for deception?

The push toward adopting Generative AI in creative fields gained another high-profile endorsement today. Akihiro Hino, CEO of the major Japanese game developer Level-5, publicly defended the company’s exploration of AI tools by invoking a classic analogy: “A knife can be used for cooking or as a weapon.” This statement, reported by Nintendo Life, summarizes the emerging corporate stance on GenAI: it is neither inherently good nor evil, but a raw capability whose morality rests entirely with the user (Level-5 Boss Defends GenAI In Game Development). As game production costs continue to soar and timelines compress, companies like Level-5 see AI as a necessary efficiency booster: a tool for composition, iteration, and asset creation. The hope is that creators and audiences alike will learn to view these technologies through a pragmatic lens, focusing on ethical usage rather than philosophical rejection.

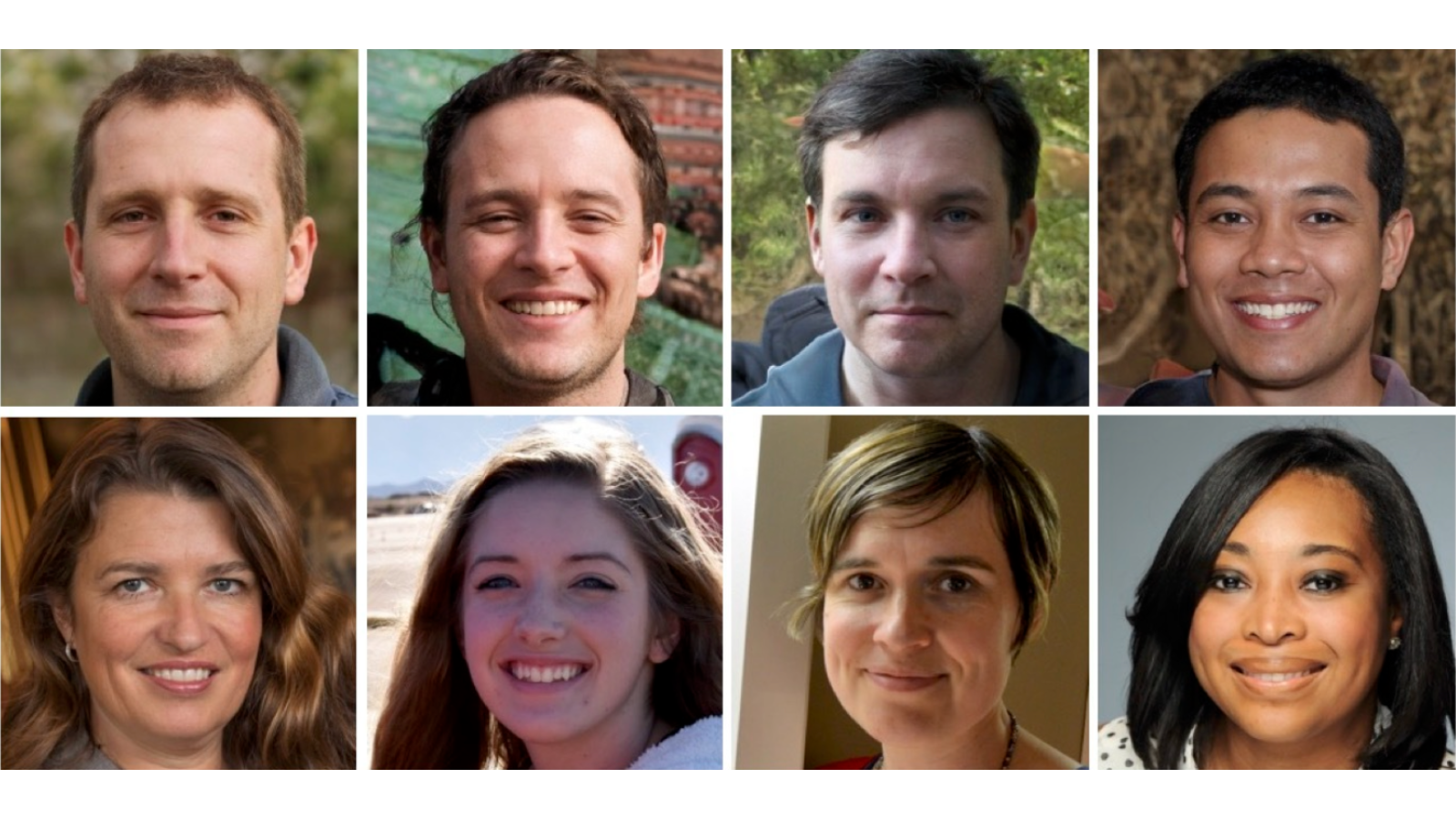

However, the very next major piece of research reminds us why that ethical tightrope walk is getting steeper every day. Live Science reported on a study confirming that AI now produces faces realistic enough to consistently fool human observers, including “super recognizers,” a rare group with exceptional facial identification skills (AI is getting better and better at generating faces — but you can train to spot the fakes). This is a stark indicator of just how mature image generation models have become. We’ve moved past the “uncanny valley” and into truly photorealistic output that defies casual inspection.

The study did offer a thin sliver of good news: humans can be trained to improve their detection rates. But the fundamental problem remains. If the most advanced models now generate visual content indistinguishable from reality, and even experts need specialized training to achieve marginal improvement, the baseline of digital trust will keep eroding. The sheer scale and speed at which AI can generate these convincing fakes, whether harmlessly for a video game or maliciously for propaganda, is overwhelming human cognitive defenses.

Today’s news cycle perfectly encapsulates the dynamic friction defining the current AI era. On one side, we have the drive for optimization, creativity, and progress, embodied by studios like Level-5 integrating GenAI as a creative “knife.” On the other side, we see the immediate societal risk that this same technological capability poses when turned toward deception. As AI systems become indispensable to the modern digital economy, our focus must pivot from simply building better models to developing equally effective, scalable, and intuitive methods for validating the authenticity of the content they produce. The knife is sharp; the real work now is securing the sheath.